For Live Training Register for Seminars Here or call 877-978-7246

Show nav

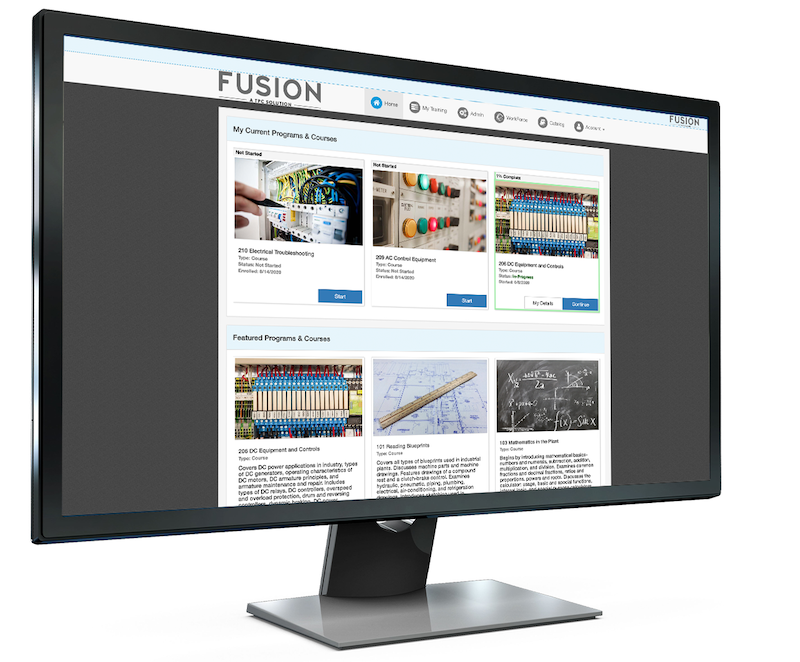



To register with TPC Training, call 877-978-7246.
